You can’t build AI products like other products.

AI products are inherently non-deterministic, and you need to constantly negotiate the tradeoff between agency and control.

When teams don’t recognize these differences, their products fail in unexpected ways, teams get stuck debugging large, complicated systems they can’t trace, and user trust in the product quietly erodes.

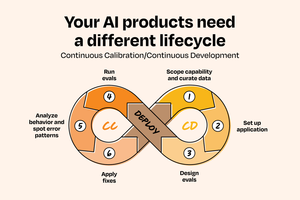

After seeing this pattern play out across 50+ AI implementations at companies including @OpenAI, @Google, @Amazon, and @Databricks, Aishwarya Naresh Reganti and Kiriti Badam developed a solution: the Continuous Calibration/Continuous Development (CC/CD) framework.

The name is a reference to Continuous Integration/Continuous Deployment (CI/CD), but, unlike its namesake, it’s meant for systems where behavior is non-deterministic and agency needs to be earned.

This framework shows you how to:

- Start with high-control, low-agency features

- Build eval systems that actually work

- Scale AI products without breaking user trust

It’s designed around what makes AI systems different, to help you build more intentional, stable, and trustworthy AI products.

They're sharing it publicly for the first time:
